An endpoint is an event or outcome that can be measured objectively to determine whether the intervention being studied is beneficial.

EDC systems often overlook the importance of defining endpoints. As far as an EDC system is concerned, all data is effectively considered equally significant. [Possibly correspondents from Medidata and/or Phaseforward can correct me on how Rave and Inform, respectively, handle this.]

Let's say that in a sample clinical trial you have 100 pages of information captured per subject, with 10 questions per page. That is a total of 1,000 data values that potentially have to be captured. The capture and cleaning process typically involves entry, review, SDV (source data verification) and freeze/lock. The time to perform this for a key data value is the same as for an item of limited significance.

EDC systems typically handle status through a hierarchical tree structure. Every data value is associated with a status. A page status reflects the status of all the data values on the page, the visit status reflects all the CRF pages in the visit, and so on. However, this places a common, blanket significance on all the data that is captured.
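To make the blanket roll-up concrete, here is a minimal Python sketch. It assumes that a parent node (page, visit) is only as far along as its least-advanced child, and that the cleaning stages are ordered as entry, review, SDV, lock. The `Status`, `DataValue`, `Page` and `Visit` names are hypothetical and are not taken from Rave, Inform or any other EDC product.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Status(IntEnum):
    """Hypothetical cleaning stages, ordered from least to most advanced."""
    MISSING = 0
    ENTERED = 1
    REVIEWED = 2
    SDV = 3
    LOCKED = 4


@dataclass
class DataValue:
    name: str
    status: Status


@dataclass
class Page:
    name: str
    values: list[DataValue] = field(default_factory=list)

    def status(self) -> Status:
        # Assumption: a page is only as far along as its least-advanced value.
        # An empty page is treated conservatively as not started.
        return min((v.status for v in self.values), default=Status.MISSING)


@dataclass
class Visit:
    name: str
    pages: list[Page] = field(default_factory=list)

    def status(self) -> Status:
        # The same rule one level up: the least-advanced page wins.
        return min((p.status() for p in self.pages), default=Status.MISSING)


# Usage: one unverified low-significance item holds back the whole visit.
vitals = Page("Vitals", [DataValue("SYSBP", Status.LOCKED),
                         DataValue("PULSE", Status.ENTERED)])
dosing = Page("Dosing", [DataValue("DOSE", Status.LOCKED)])
week1 = Visit("Week 1", [vitals, dosing])
print(week1.status().name)  # ENTERED
```

Because every value feeds the same `min`, the dosing page being fully locked makes no difference: the visit cannot be treated as clean until the pulse reading is too.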

It could be argued that all data has equivalent significance in the execution of a study: the protocol stated a requirement to capture it for some reason. However, I believe the subset of captured information that actually carries endpoint significance can be defined at the outset. The question is, going back to our example of 1,000 data values per subject: is it possible to make an early assessment of the data, based on a statistically safe error threshold, rather than waiting until all subjects, all visits, all pages and all data values are locked?

For example, consider efficacy, and in particular efficacy in a Phase II dose escalation study. Information on the dosing of a subject, followed by the resulting measurements of effectiveness, may be available relatively early in the overall duration of a trial. However, a blanket 'clean versus not clean' rule means that none of the data can be examined until either ALL the data achieves a full DB lock or an interim DB lock (all visits up to a defined point) is achieved.

So - a question to the readers - is it possible to make assessments on data even if a portion of the data is either missing, or unverified?

One potential solution might be a sub-classification of data (or rather metadata).

When defining fields, a classification could be assigned that identifies a recorded value as 'end-point' significant. The potential endpoints could be maintained as a list defined at a system level, supporting one primary end-point and as many secondary end-points as necessary. A value might be classified against one or more endpoint classifications.

The value of this would lie in cleaning and data delivery. Rather than deriving a tree status from all captured data values, the tree status would be an accumulation of only the data values that fall within the endpoint classification.
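The proposal can be sketched by attaching endpoint classifications to each value as metadata and rolling up status over only the matching values. This is a minimal Python sketch under stated assumptions: the stage ordering follows entry, review, SDV, lock; a roll-up takes the least-advanced status; and the `ENDPOINTS` list, its entries and all class and function names are hypothetical illustrations, not features of any existing EDC system.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Status(IntEnum):
    """Hypothetical cleaning stages, ordered from least to most advanced."""
    MISSING = 0
    ENTERED = 1
    REVIEWED = 2
    SDV = 3
    LOCKED = 4


# Hypothetical system-level list of endpoint classifications:
# one primary endpoint plus as many secondary endpoints as needed.
ENDPOINTS = {"PRIMARY_EFFICACY", "SECONDARY_SAFETY", "SECONDARY_FOLLOW_UP"}


@dataclass
class DataValue:
    name: str
    status: Status
    # Field-level metadata: a value may belong to zero, one or several endpoints.
    endpoints: frozenset[str] = frozenset()

    def __post_init__(self) -> None:
        # Only classifications from the system-level list are accepted.
        unknown = self.endpoints - ENDPOINTS
        if unknown:
            raise ValueError(f"unknown endpoint classification(s): {sorted(unknown)}")


def blanket_status(values: list[DataValue]) -> Status:
    """Conventional roll-up: every captured value counts equally."""
    return min(v.status for v in values)


def endpoint_status(values: list[DataValue], endpoint: str) -> Optional[Status]:
    """Roll up status using only the values classified against `endpoint`.

    Returns None when no value carries that classification, i.e. the
    endpoint places no constraint on this collection of values.
    """
    relevant = [v.status for v in values if endpoint in v.endpoints]
    return min(relevant) if relevant else None


# Usage: the efficacy subset can be further along than the subject as a whole.
subject_values = [
    DataValue("DOSE", Status.LOCKED, frozenset({"PRIMARY_EFFICACY"})),
    DataValue("RESPONSE_SCORE", Status.SDV, frozenset({"PRIMARY_EFFICACY"})),
    DataValue("AE_TERM", Status.REVIEWED, frozenset({"SECONDARY_SAFETY"})),
    DataValue("SMOKING_HISTORY", Status.ENTERED),  # no endpoint significance
]

print(blanket_status(subject_values).name)                      # ENTERED
print(endpoint_status(subject_values, "PRIMARY_EFFICACY").name)  # SDV
print(endpoint_status(subject_values, "SECONDARY_FOLLOW_UP"))    # None
```

Using a set of classifications per value, rather than a single flag, lets one data point (a dose, say) count toward several endpoints at once, matching the idea that a value may be classified against one or more endpoints.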

So, with our example, let's say that of the 1,000 data values captured per subject, only 150 might be considered of endpoint significance for efficacy. Once all of those values are captured and designated as 'clean', the data would be usable for immediate statistical analysis. Of course, other secondary end-points may exist that demand longer-term analysis of the subject data, for example follow-ups.

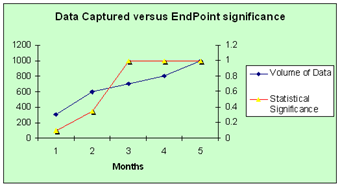

The chart models a typical data capture/cleaning cycle with ongoing analysis of endpoint-significant data: statistically significant efficacy is determined at 3 months rather than 5.

The value of making early decisions is well established; adaptive clinical trials often rely on this principle. By delivering data earlier, in a statistically safe state of cleanliness, we could potentially greatly accelerate the overall development process.

1 comment:

First, there are no "EDC Endpoints" in a clinical trial. EDC is just a mechanism of collecting data to support the clinical trial objectives.

It is true that EDC systems view all data as the same, whether a data point supports the primary objective of the study, a secondary objective or even a hypothesis-generating assessment. EDC applications were designed to efficiently capture and clean each of those data points with the same rigor.

That "clean all the same" capability runs up against the many forms of risk-based or adaptive approaches to collecting and cleaning clinical trial data. Sponsors know which data are critical to safety and efficacy endpoints, and generally develop a data management plan that defines which data will be cleaned and to what level, so they are able to make the best decision at the right point in time based on the cleanest data set possible.

In addition, many sponsors are implementing reduced SDV plans that take a risk-based approach to comparing source data to EDC entries. Those plans take many forms, but they share the premise that the days of 100% SDV may be over.

All that said, EDC systems with their clean-the-same functionality and page status features don't readily support these business processes. I have heard of sponsors who no longer use the SDV-complete status in EDC because it may reveal the reduced SDV strategy to the Investigator. Their concern is how that information might affect the Investigator's attention to detail on non-SDV data points.

I am not sure I would recommend that EDC systems be modified to flag data as primary, secondary, SDV, or non-SDV. It's hard enough to move from protocol to EDC database to study start without adding more complications to database builds. Knowing your EDC capability and your internal business processes should allow sponsors to create an EDC process which best fits their needs.

Post a Comment